As enterprises embrace cloud-native technologies, the software landscape is evolving at a rapid pace. Architectures built on microservices, containers, and serverless computing are now standard in delivering fast, scalable, and resilient applications. However, with this evolution comes the challenge of ensuring consistent quality across highly dynamic and distributed systems.

Cloud-native testing addresses these challenges by enabling continuous validation in environments that scale automatically and change frequently. Unlike traditional QA methods, which rely on fixed infrastructures and manual execution, cloud-native testing integrates seamlessly into CI/CD pipelines and leverages automation, observability, and infrastructure-as-code principles. It ensures that every component, no matter how ephemeral or decoupled, meets performance, security, and reliability standards.

For organizations operating at scale, testing must adapt to modern deployment patterns, validating not only code correctness but also system behavior under real-world, production-like conditions. A strong cloud-native testing strategy ensures that quality is not an afterthought but an integral part of the delivery pipeline.

What is Cloud-Native Testing?

Cloud-native testing refers to a modern approach to software quality assurance that aligns with cloud-native application design and deployment principles. It is specifically tailored for applications built using technologies like microservices, containers (e.g., Docker), container orchestration platforms (e.g., Kubernetes), and serverless computing frameworks (e.g., AWS Lambda, Azure Functions, Google Cloud Functions).

Unlike traditional monolithic systems, where the application is deployed as a single, unified codebase, cloud-native applications are distributed across multiple services and often run in ephemeral environments that scale up and down automatically. This architectural shift requires a testing methodology that is equally dynamic, automated, and deeply integrated into the development lifecycle.

Key Characteristics of Cloud-Native Testing:

- Designed for Distributed Systems : Cloud-native testing is built to handle loosely coupled services that communicate over networks and may be deployed independently. It must account for service dependencies, latency, data consistency, and network resilience.

- Infrastructure Aware : Tests are executed in real or simulated environments that reflect the production infrastructure, often leveraging containers or cloud-based test clusters. This ensures more accurate and actionable test results.

- Automation-Centric : Manual testing does not scale in cloud-native ecosystems. Automated tests (unit, integration, end-to-end, and performance) are embedded directly into CI/CD pipelines to support rapid and continuous releases.

- Observability-Driven : Since many services are short-lived and logs may be distributed across environments, cloud-native testing relies heavily on real-time metrics, logging, and tracing tools to validate system behavior during and after deployment.

- Environment-Independent and Scalable : Cloud-native tests are designed to run consistently across staging, testing, and production environments. They can scale with demand and adapt to dynamic provisioning of resources.

In essence, cloud-native testing is not just a set of tools or scripts; it is a strategy that evolves with the system architecture. It requires QA teams, DevOps engineers, and developers to collaborate closely, ensuring that testing is an integral part of the development process from the very beginning.

By embracing this approach, organizations gain faster feedback, higher confidence in deployments, and stronger system resilience, allowing them to innovate quickly while maintaining high standards of quality and reliability.

Testing in Serverless Environments: Unique Challenges and Considerations

Serverless computing has transformed how modern applications are designed, deployed, and scaled. In this model, applications run in stateless, event-driven compute containers managed entirely by cloud providers such as AWS, Azure, or Google Cloud. Developers no longer provision or manage servers—instead, they write functions that are triggered by specific events (e.g., HTTP requests, database updates, file uploads).

While this architecture accelerates development, reduces operational overhead, and offers virtually unlimited scalability, it also presents unique challenges for quality assurance professionals.

Key Testing Considerations in Serverless Architectures:

- Ephemeral Nature of Services : Serverless functions typically run in short-lived execution environments that spin up and shut down based on demand. This transient behavior makes traditional testing approaches, especially those that depend on persistent environments, ineffective. Test strategies must accommodate functions that exist only for the duration of an invocation and must exercise every code path through real or simulated invocations.

- Event-Driven Execution : Unlike traditional applications driven by direct user interactions or synchronous API requests, serverless systems are often triggered by asynchronous events, ranging from database changes to messages and callbacks from third-party services. Effective testing in this model involves simulating real-world triggers and complex workflows across multiple services. Test suites must validate both functional correctness and the orchestration of events across distributed systems.

- Limited Infrastructure Control : One of the defining characteristics of serverless is that infrastructure management is abstracted away. QA teams have no direct access to the underlying servers or runtime configuration, so traditional monitoring and debugging techniques are of limited use. Instead, teams must depend on cloud-native observability tools, such as distributed tracing, structured logging, and real-time metrics, to understand performance, detect anomalies, and validate system behavior.

- Cold Starts and Performance Variability : Because serverless functions are spun up on demand, an invocation that lands on a new execution environment can incur "cold start" latency while the runtime initializes. Testing must assess performance across both warm and cold executions, especially for latency-sensitive use cases.

- Third-Party Integration Complexity : Many serverless workflows integrate with external services (e.g., cloud storage, message queues, databases). These interactions must be mocked, emulated, or tested in staging environments that closely mimic production to ensure consistent behavior across environments.
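To make the event-driven and third-party points concrete, the following Python sketch tests a hypothetical Lambda-style handler by feeding it a hand-built S3 upload event and replacing the storage client with a `unittest.mock.Mock`. The names `make_handler` and `s3_put_event` are illustrative, the event contains only the subset of S3 notification fields the handler reads, and the injected client is assumed to expose a boto3-style `head_object` call.

```python
import json
import urllib.parse
from unittest.mock import Mock


def make_handler(storage_client):
    """Build a hypothetical Lambda-style handler with an injected storage client."""

    def handler(event, context=None):
        results = []
        for record in event.get("Records", []):
            bucket = record["s3"]["bucket"]["name"]
            # Object keys in S3 event notifications are URL-encoded ("+" for spaces).
            key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
            head = storage_client.head_object(Bucket=bucket, Key=key)
            results.append({"key": key, "size": head["ContentLength"]})
        return {"statusCode": 200, "body": json.dumps(results)}

    return handler


def s3_put_event(bucket, key):
    """Minimal S3 ObjectCreated-style event with only the fields the handler reads."""
    return {
        "Records": [
            {
                "eventSource": "aws:s3",
                "eventName": "ObjectCreated:Put",
                "s3": {"bucket": {"name": bucket}, "object": {"key": key}},
            }
        ]
    }


# Simulate the trigger and replace the real storage service with a mock.
fake_storage = Mock()
fake_storage.head_object.return_value = {"ContentLength": 1024}
handler = make_handler(fake_storage)

response = handler(s3_put_event("uploads", "reports/q1+summary.csv"))
fake_storage.head_object.assert_called_once_with(
    Bucket="uploads", Key="reports/q1 summary.csv"
)
print(response["body"])  # [{"key": "reports/q1 summary.csv", "size": 1024}]
```

Because the client is injected rather than created inside the handler, the same function can be pointed at a local emulator or a staging bucket simply by passing a different client.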

The Bottom Line

Testing serverless applications requires a shift in mindset, from traditional infrastructure-dependent practices to dynamic, cloud-integrated workflows. Successful QA in serverless environments means designing test strategies that are event-aware, resilient to environmental changes, and focused on behavior rather than infrastructure.

By embracing these testing principles, organizations can unlock the full benefits of serverless architecture—rapid innovation, cost savings, and elastic scalability—without sacrificing quality or reliability.

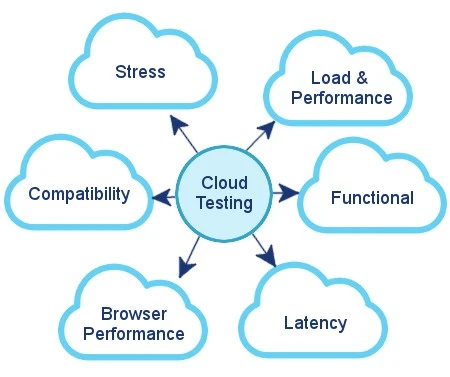

Types of Testing in Cloud Environments

The following testing types are essential to ensure the performance, compatibility, and functionality of applications deployed in cloud-native architectures. Each plays a unique role in verifying that systems operate efficiently across distributed environments.

- Load & Performance Testing : Cloud applications must handle variable user loads across geographies and time zones. Load testing simulates expected and peak traffic to evaluate how the application behaves under sustained demand, while performance testing measures response time, throughput, and scalability. Together they help ensure the application remains fast and stable, even at peak usage.

- Functional Testing : Functional testing ensures that cloud-hosted applications behave as expected. This includes validating APIs, workflows, input/output logic, and user scenarios across multiple environments. Automation frameworks are often used to streamline this process in CI/CD pipelines.

- Latency Testing : In cloud computing, latency can vary with server location, user location, and network routing. Latency testing identifies delays in data transmission and verifies that the system responds in real time or within acceptable limits, which is especially important for financial apps, video conferencing, and real-time dashboards.

- Browser Performance Testing : Applications hosted in the cloud are accessed via various browsers and devices. Browser performance testing checks if your app performs consistently across Chrome, Firefox, Safari, Edge, and others. It ensures that front-end elements load efficiently, regardless of the platform.

- Compatibility Testing : Cloud applications must be compatible across operating systems, devices, networks, and browsers. Compatibility testing validates that the application functions seamlessly across this wide variety of configurations, preventing user-side disruptions.

- Stress Testing : Stress testing evaluates system behavior under extreme conditions—such as heavy traffic, reduced infrastructure, or sudden failovers. This helps QA teams assess application resilience and prepare for unexpected spikes or infrastructure failures.
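The load and latency checks described above share a core loop: issue many concurrent requests, record each response time, and compare a high percentile against a budget. The following Python sketch shows that loop using only the standard library. `call_service` is a hypothetical stand-in that sleeps instead of calling a real endpoint, and the 250 ms p95 budget is an assumed example value; dedicated load-testing tools add ramp-up profiles, distributed load generators, and reporting on top of this idea.

```python
import math
import random
import time
from concurrent.futures import ThreadPoolExecutor


def call_service():
    """Hypothetical stand-in for one request to the system under test."""
    time.sleep(random.uniform(0.005, 0.02))


def timed_call(_):
    """Run one request and return its latency in seconds."""
    start = time.perf_counter()
    call_service()
    return time.perf_counter() - start


def percentile(samples, pct):
    """Nearest-rank percentile for pct in (0, 100]."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def run_load_test(total_requests=200, concurrency=20):
    """Issue total_requests calls from `concurrency` worker threads and summarize latency."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(timed_call, range(total_requests)))
    return {
        "requests": len(latencies),
        "p50_ms": percentile(latencies, 50) * 1000,
        "p95_ms": percentile(latencies, 95) * 1000,
        "max_ms": max(latencies) * 1000,
    }


P95_BUDGET_MS = 250  # assumed latency budget for this example

report = run_load_test()
print(report)
assert report["p95_ms"] < P95_BUDGET_MS, f"p95 latency over budget: {report}"
```

Tracking a high percentile rather than the average matters here, because a small share of slow responses can be hidden by a healthy mean.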

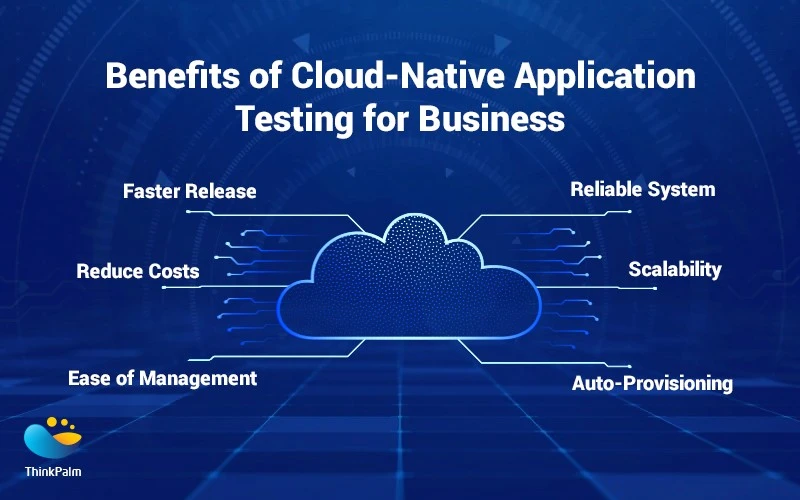

Benefits of Cloud-Native Testing

While cloud-native architectures introduce complexity, they also unlock powerful advantages for modern QA practices. Testing in cloud-native environments isn’t just about keeping up—it’s about testing smarter, faster, and more effectively at scale.

1. Scalability

Cloud-native testing supports elastic scaling. Whether you're testing a microservice in isolation or running end-to-end scenarios across multiple containers, your automated test suites can scale alongside the application itself—on-demand and without manual provisioning. This makes it possible to:

- Run parallel test executions across thousands of containers

- Handle sudden load increases in performance testing

- Optimize resource usage based on real-time demand
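Parallel execution usually starts with splitting the suite into shards so that each container runs a disjoint subset. The Python sketch below assigns tests to shards with a stable hash, so every worker computes the same split without coordination. The `SHARD_INDEX` and `SHARD_TOTAL` environment variables are assumed names that a CI system or Kubernetes Job would set per container; many test runners and CI services offer built-in splitting, sometimes balanced by historical test duration instead of a hash.

```python
import hashlib
import os


def shard_for(test_id: str, total_shards: int) -> int:
    """Map a test ID to a shard with a stable hash (Python's hash() is salted per process)."""
    digest = hashlib.sha256(test_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % total_shards


def select_tests(test_ids, shard_index: int, total_shards: int):
    """Return the subset of test_ids that this worker should run."""
    if not 0 <= shard_index < total_shards:
        raise ValueError("shard_index must be in [0, total_shards)")
    return [t for t in test_ids if shard_for(t, total_shards) == shard_index]


# Each container reads its position from (hypothetical) environment variables.
shard_index = int(os.environ.get("SHARD_INDEX", "0"))
total_shards = int(os.environ.get("SHARD_TOTAL", "1"))

all_tests = [f"tests/test_orders.py::test_case_{i}" for i in range(12)]
my_tests = select_tests(all_tests, shard_index, total_shards)
print(f"shard {shard_index}/{total_shards}: running {len(my_tests)} tests")
```

Hash-based assignment keeps the split deterministic, but it does not balance run time; suites with a few very slow tests may need duration-aware splitting.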

2. Real-World Simulation

Cloud testing environments can closely mirror real-world production conditions. By testing in distributed, cloud-based staging environments, teams can:

- Emulate global user traffic

- Simulate varying latency or regional performance

- Replicate infrastructure components such as load balancers, APIs, and third-party integrations

This level of realism helps uncover issues that traditional lab-based testing environments often miss.

3. Faster Feedback Loops

When integrated with CI/CD pipelines, cloud-native testing enables shift-left testing—where bugs are caught early in the development lifecycle. Automated tests run continuously with every code commit, pull request, or build, providing:

- Near-instant feedback to developers

- Quicker resolution of defects

- Shorter release cycles with greater confidence

This speed transforms quality assurance from a bottleneck into a catalyst for innovation.

4. Improved Resilience with Chaos Testing

Cloud-native platforms support advanced practices like chaos engineering and fault injection, allowing testers to deliberately introduce failures (e.g., API timeouts, pod crashes, network throttling) to observe how the system responds.

Benefits include:

- Early detection of single points of failure

- Validation of fallback mechanisms and error handling

- A more resilient, self-healing architecture
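At its smallest scale, fault injection means wrapping a dependency so that it fails on purpose and then checking that the caller degrades gracefully. The Python sketch below does this in-process; `fetch_recommendations`, the fallback list, and the 30% failure rate are hypothetical. Platform-level chaos tools inject faults such as pod terminations or network delays from outside the application instead, but the property being verified is the same: every call still receives a usable response.

```python
import random


class InjectedTimeout(TimeoutError):
    """Raised by the injector to simulate an upstream timeout."""


class FaultInjector:
    """Wraps a function so that a configurable fraction of calls fail."""

    def __init__(self, failure_rate, seed=None):
        self.failure_rate = failure_rate
        self.rng = random.Random(seed)  # seeded for reproducible experiments
        self.injected = 0

    def wrap(self, func):
        def wrapper(*args, **kwargs):
            if self.rng.random() < self.failure_rate:
                self.injected += 1
                raise InjectedTimeout(f"injected fault in {func.__name__}")
            return func(*args, **kwargs)

        return wrapper


def fetch_recommendations(user_id):
    """Hypothetical upstream call."""
    return [f"item-{user_id}-{n}" for n in range(3)]


def recommendations_with_fallback(fetch, user_id):
    """Code under test: fall back to a static list when the upstream times out."""
    try:
        return fetch(user_id), "live"
    except TimeoutError:
        return ["bestseller-1", "bestseller-2"], "fallback"


def run_experiment(calls=1000, failure_rate=0.3, seed=42):
    injector = FaultInjector(failure_rate, seed)
    flaky_fetch = injector.wrap(fetch_recommendations)
    modes = {"live": 0, "fallback": 0}
    for user_id in range(calls):
        result, mode = recommendations_with_fallback(flaky_fetch, user_id)
        assert result, "callers must never receive an empty response"
        modes[mode] += 1
    # Every injected fault should have been absorbed by the fallback path.
    assert modes["fallback"] == injector.injected
    return modes


print(run_experiment())
```

The fallback catches the general `TimeoutError` rather than the injector's subclass, so the code under test handles injected faults exactly as it would handle real timeouts.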

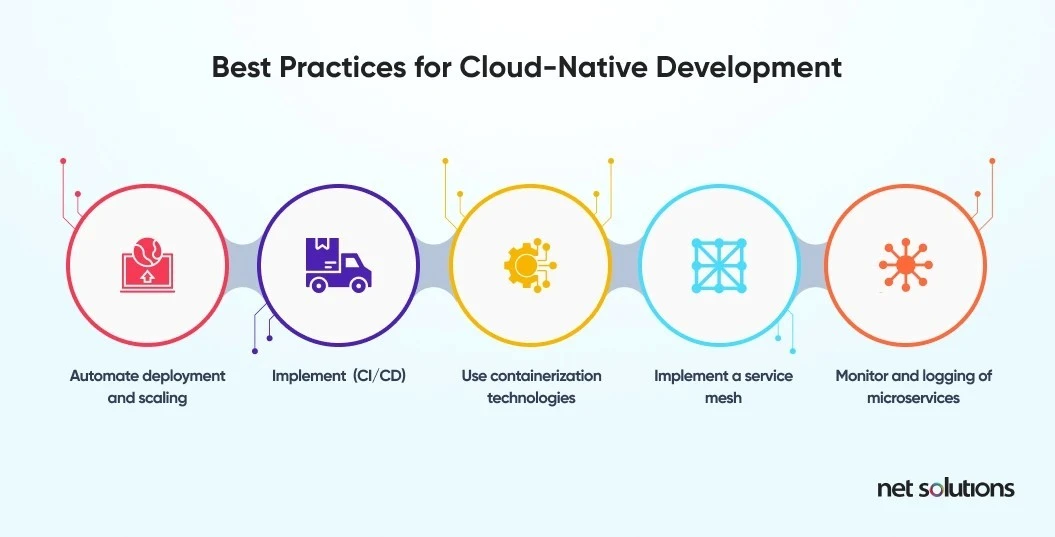

Best Practices for Cloud-Native Testing

Testing in cloud-native environments requires a strategic approach that aligns with the distributed, dynamic, and automated nature of modern software systems. Below are essential practices to ensure quality, performance, and resilience in a cloud-native world.

a. Shift-Left Testing

Move testing earlier in the software development lifecycle. Embedding unit, API, and integration tests during the build stage helps identify defects early and reduce the cost of fixing issues. This approach promotes faster feedback for developers and ensures higher code quality from the start.

b. Test Automation Across the Stack

Automate testing at every layer—unit, integration, end-to-end, performance, and security. Automation is critical in dynamic cloud environments where manual testing cannot keep pace with deployment speed. Automated tests should be integrated into the CI/CD pipeline to ensure consistent and repeatable results.

c. Environment Parity Using Containers

Use containers (e.g., Docker) and the same orchestration platform as production (e.g., Kubernetes) so that your test environments closely resemble production. This reduces configuration drift and improves the reliability of test results. Infrastructure as Code (IaC) can be used to provision consistent environments across development, testing, and production.
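One way to check environment parity in a pipeline is to compare the rendered configuration of each environment and fail when they diverge in unintended ways. The following Python sketch assumes each environment's settings have already been exported to a flat dictionary (for example, from IaC outputs or deployment manifests); the keys and values shown are hypothetical, and `replicas` is treated as an allowed difference because staging is usually smaller than production.

```python
MISSING = "<missing>"


def find_drift(baseline, candidate, allowed_differences=frozenset()):
    """Return {key: (baseline_value, candidate_value)} for every unexpected mismatch."""
    drift = {}
    for key in sorted(set(baseline) | set(candidate)):
        if key in allowed_differences:
            continue
        expected = baseline.get(key, MISSING)
        actual = candidate.get(key, MISSING)
        if expected != actual:
            drift[key] = (expected, actual)
    return drift


production = {
    "image": "orders:1.4.2",
    "replicas": 6,
    "db_engine": "postgres-15",
    "tls": True,
}
staging = {
    "image": "orders:1.4.2",
    "replicas": 2,
    "db_engine": "postgres-13",
}

drift = find_drift(production, staging, allowed_differences={"replicas"})
for key, (expected, actual) in drift.items():
    print(f"drift in {key!r}: production={expected!r} staging={actual!r}")
```

Listing intentional differences explicitly keeps the check strict by default: any new setting that differs between environments is reported until someone decides it is acceptable.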

d. Observability and Monitoring Integration

In cloud-native testing, visibility into system behavior is essential. Incorporate logging, metrics, and distributed tracing into your test processes. These observability tools help diagnose failures, monitor performance, and understand system behavior during testing and in production-like simulations.
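A small step toward observable tests is emitting structured, correlated logs, so that a test (or a log query) can follow one request across services. The Python sketch below uses only the standard `logging` module to write JSON lines that carry a shared `trace_id`; `place_order` and the service names are hypothetical. In a real system, a tracing library such as OpenTelemetry would typically generate and propagate the trace context across network calls rather than passing it as a function argument.

```python
import io
import json
import logging
import uuid


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        return json.dumps(
            {
                "level": record.levelname,
                "service": getattr(record, "service", None),
                "trace_id": getattr(record, "trace_id", None),
                "message": record.getMessage(),
            }
        )


def build_logger(stream):
    logger = logging.getLogger("observability-demo")
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


def place_order(logger, trace_id):
    """Hypothetical two-hop flow; each hop logs with the same trace_id."""
    logger.info("order received", extra={"service": "orders", "trace_id": trace_id})
    logger.info("payment authorized", extra={"service": "payments", "trace_id": trace_id})


# A test can capture the logs and assert that the trace is unbroken.
buffer = io.StringIO()
logger = build_logger(buffer)
trace_id = uuid.uuid4().hex
place_order(logger, trace_id)

entries = [json.loads(line) for line in buffer.getvalue().splitlines()]
assert [e["service"] for e in entries] == ["orders", "payments"]
assert all(e["trace_id"] == trace_id for e in entries)
print(buffer.getvalue(), end="")
```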

e. Chaos Engineering for Resilience

Introduce deliberate failures to test the system’s response. Chaos engineering helps validate your application's ability to recover from unexpected disruptions. Simulate outages, latency, or service crashes to ensure the system degrades gracefully and recovers effectively.

f. Cross-Region and Multi-Cloud Testing

If your application is deployed across multiple regions or cloud providers, it is important to validate functionality, performance, and availability across all environments. Simulate real-world traffic patterns and regional failovers to ensure global reliability.
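Regional failover can be exercised in tests by simulating an outage in one region and asserting that traffic moves to the next. The Python sketch below shows a client-side failover loop; the region names, `RegionUnavailable`, and the simulated `send` function are hypothetical, and production systems often fail over at the DNS or load-balancer layer rather than in application code.

```python
class RegionUnavailable(Exception):
    """Raised when a region cannot serve the request."""


def call_with_failover(regions, send):
    """Try regions in priority order; return (region, response) from the first that succeeds."""
    errors = {}
    for region in regions:
        try:
            return region, send(region)
        except RegionUnavailable as exc:
            errors[region] = str(exc)
    raise RuntimeError(f"all regions failed: {errors}")


def make_simulated_send(down_regions):
    """Build a fake transport in which the given regions are unavailable."""

    def send(region):
        if region in down_regions:
            raise RegionUnavailable(f"{region} is down")
        return {"status": 200, "served_by": region}

    return send


PRIORITY = ["us-east-1", "eu-west-1", "ap-southeast-1"]

# Simulate a primary-region outage and check that the next region serves traffic.
region, response = call_with_failover(PRIORITY, make_simulated_send({"us-east-1"}))
print(region, response)
```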

g. Security Testing as a Core Component

Security should be built into every phase of testing. Conduct automated vulnerability scanning, penetration testing, and identity validation. Test APIs, container images, and third-party services for potential security flaws, especially in dynamic environments with frequent updates.

Integrating Testing into CI/CD Pipelines

In cloud-native development, continuous testing is a critical part of CI/CD.

Key Steps:

- Trigger unit tests on every code commit

- Run integration tests with every pull request

- Deploy to staging environments automatically

- Run regression and load tests pre-release

- Monitor production with canary testing and real-time feedback

This allows for rapid releases without compromising on quality.
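The canary step in the list above is often automated as a gate that compares the canary's metrics with the stable baseline before a release is promoted. The Python sketch below shows one simple rule set; the thresholds (an absolute error-rate increase of one percentage point, a 20% p95 latency margin, a minimum of 500 requests) and the `ReleaseMetrics` fields are assumptions, and dedicated canary-analysis tools typically apply statistical comparisons across many metrics.

```python
from dataclasses import dataclass


@dataclass
class ReleaseMetrics:
    requests: int
    errors: int
    p95_latency_ms: float

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_verdict(
    baseline: ReleaseMetrics,
    canary: ReleaseMetrics,
    max_error_rate_increase: float = 0.01,
    max_latency_ratio: float = 1.2,
    min_requests: int = 500,
):
    """Return ("wait" | "rollback" | "promote", reason)."""
    if canary.requests < min_requests:
        return "wait", f"only {canary.requests} canary requests; need {min_requests}"
    if canary.error_rate > baseline.error_rate + max_error_rate_increase:
        return "rollback", (
            f"error rate {canary.error_rate:.2%} vs baseline {baseline.error_rate:.2%}"
        )
    if canary.p95_latency_ms > baseline.p95_latency_ms * max_latency_ratio:
        return "rollback", (
            f"p95 {canary.p95_latency_ms:.0f} ms vs baseline {baseline.p95_latency_ms:.0f} ms"
        )
    return "promote", "canary within thresholds"


baseline = ReleaseMetrics(requests=20_000, errors=40, p95_latency_ms=180.0)
canary = ReleaseMetrics(requests=1_000, errors=30, p95_latency_ms=185.0)
print(canary_verdict(baseline, canary))
```

The "wait" verdict guards against promoting or rolling back on too little traffic, where a handful of errors could swing the error rate either way.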

Future of QA in a Cloud-Native World

As organizations continue moving toward cloud-native maturity, QA will evolve in the following ways:

- Test-as-Code: Defining test cases in code repositories with version control and collaboration.

- AI-Driven Testing: Using machine learning to detect patterns, predict failures, and auto-generate tests.

- Serverless CI/CD: Running entire test pipelines on serverless infrastructure for cost-effective scaling.

- Self-healing Tests: Systems that auto-correct failed test cases due to environment drift or test flakiness.

Testing will shift from just validating features to ensuring resilience, observability, and agility.

Conclusion

Cloud-native testing is more than just adopting new tools—it's a cultural and strategic shift in how software is validated in modern environments. In a world where applications are distributed, event-driven, and constantly evolving, quality assurance must be equally agile.

By embracing automation, observability, continuous integration, and resilience testing, businesses can ensure their applications are not just functional, but truly cloud-ready.